Commits on Source (17)

- William Waites authored
- Pat Prochacki authored
- Pat Prochacki authored
- Pat Prochacki authored
- Pat authored
- Pat authored
- Pat authored
- Pat authored
- Pat authored
- Pat Prochacki authored
- William Waites authored
- William Waites authored
- William Waites authored
- Pat Prochacki authored
- William Waites authored
- William Waites authored

Showing

- README.md: 48 additions, 0 deletions
- README.org: 0 additions, 41 deletions
- cs101-csai-assignment3.md: 45 additions, 0 deletions
- cs101-csai-lec2.org: 3 additions, 1 deletion
- cs101-csai-lec2.pdf: 0 additions, 0 deletions
- img/idlewin.png: 0 additions, 0 deletions
- img/pycharmwin.png: 0 additions, 0 deletions
- img/pythoncmd.png: 0 additions, 0 deletions
- img/vscodewin.png: 0 additions, 0 deletions
- lec2/__init__.py: 0 additions, 0 deletions
- lec2/letters.py: 13 additions, 11 deletions
- marking/aabbcc.txt: 1 addition, 0 deletions
- marking/abcd.txt: 1 addition, 0 deletions
- marking/abcda.txt: 1 addition, 0 deletions
- marking/barlow.txt: 32 additions, 0 deletions
- marking/lec2: 1 addition, 0 deletions
- marking/mark1.sh: 39 additions, 0 deletions
- marking/mark2.py: 382 additions, 0 deletions
- marking/mark3.py: 443 additions, 0 deletions
- marking/mary.txt: 2 additions, 0 deletions

README.md

0 → 100644

README.org

deleted

100644 → 0

cs101-csai-assignment3.md

0 → 100644

img/idlewin.png

0 → 100644

34.3 KiB

img/pycharmwin.png

0 → 100644

123 KiB

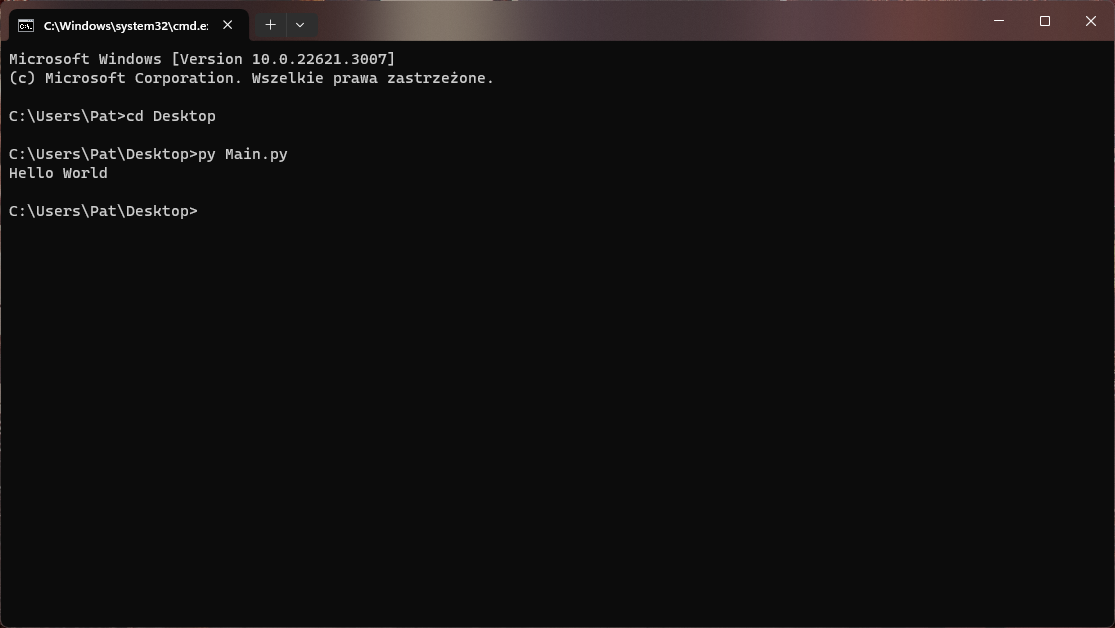

img/pythoncmd.png

0 → 100644

63.5 KiB

img/vscodewin.png

0 → 100644

46.2 KiB

lec2/__init__.py

0 → 100644

marking/aabbcc.txt

0 → 100644

marking/abcd.txt

0 → 100644

marking/abcda.txt

0 → 100644

marking/barlow.txt

0 → 100644

marking/lec2

0 → 120000

marking/mark1.sh

0 → 100755

marking/mark2.py

0 → 100644

marking/mark3.py

0 → 100644

marking/mary.txt

0 → 100644